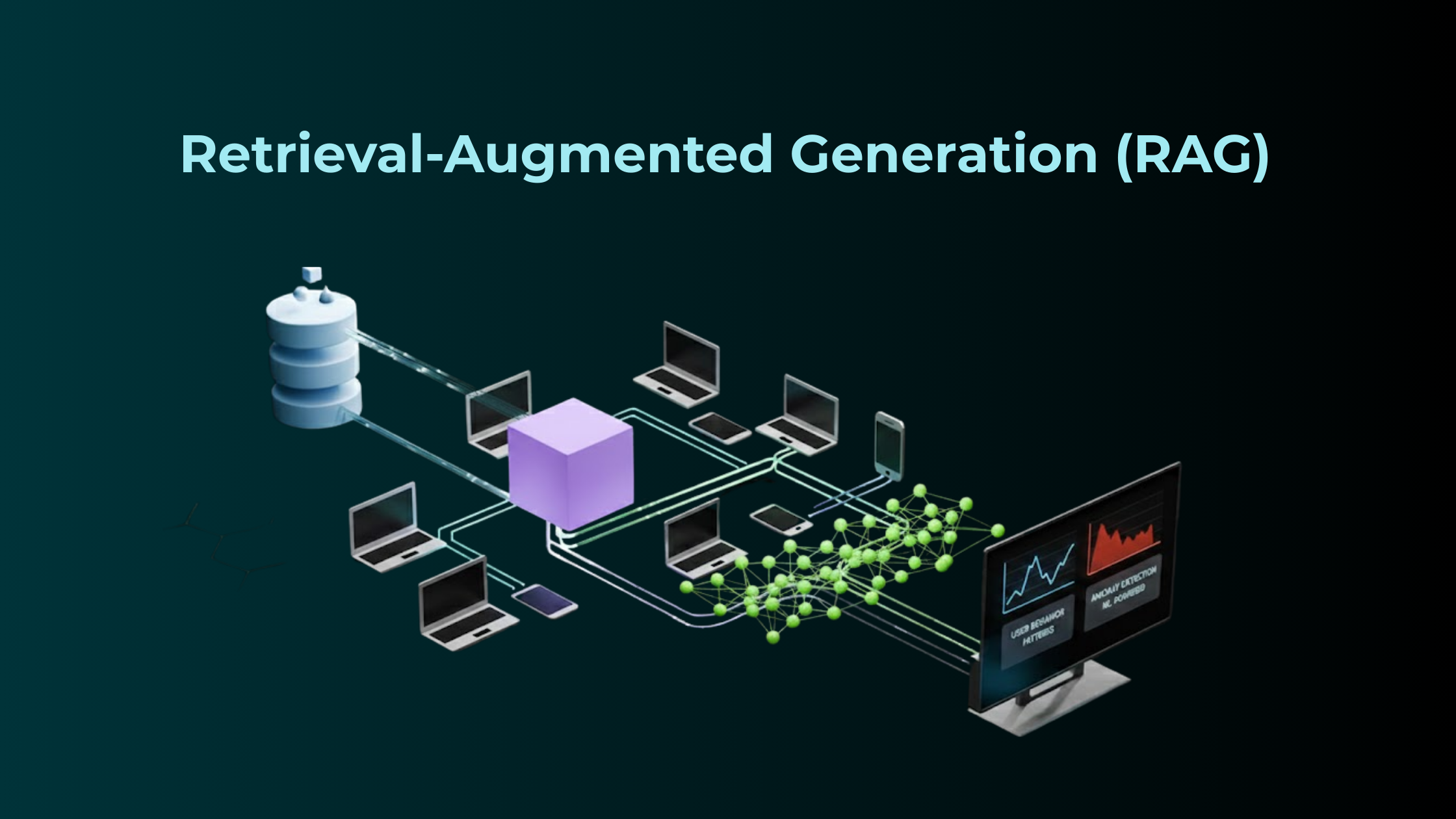

What is Retrieval-Augmented Generation (RAG)?

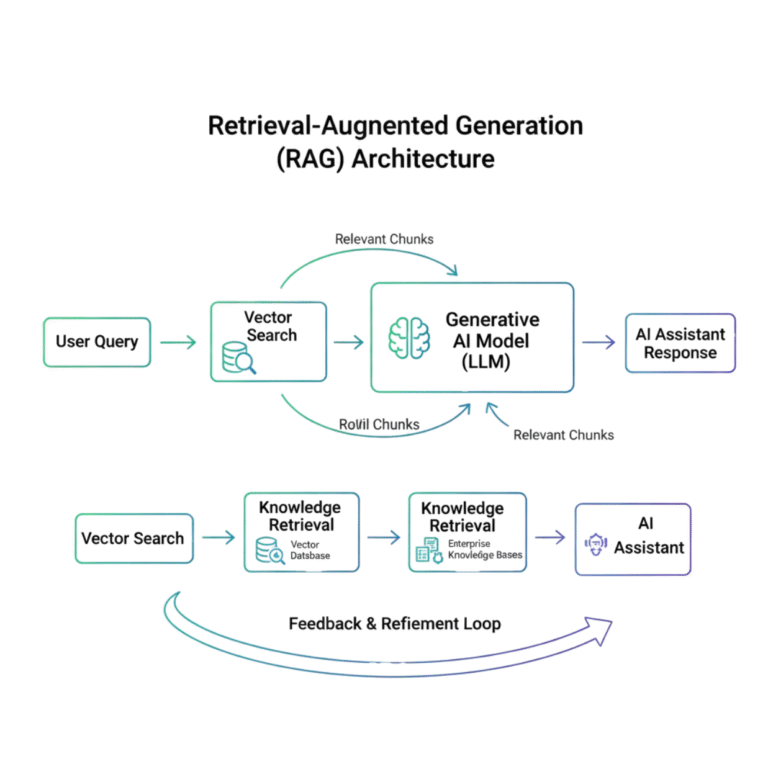

Retrieval-Augmented Generation (RAG) is an approach that enhances AI systems by combining real-time information retrieval with generative models. Instead of relying only on what a model learned during training, RAG allows it to search external knowledge sources—such as databases, documents, or internal enterprise systems—before generating a response.

In simple terms, RAG gives AI access to a live knowledge base. When a question is asked, the system retrieves relevant information from trusted sources and then uses that context to produce a more accurate and grounded answer. This bridges the gap between static training data and constantly evolving real-world information.
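This minimal Python sketch shows the retrieve-then-generate flow end to end. It is illustrative only. The three documents are invented. Relevance is scored with a simple bag-of-words cosine similarity rather than the embedding models production systems usually use. The final call to a generative model is left out, because it depends on whichever model and API you choose.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would hold chunks
# pulled from databases, documents, or internal enterprise systems.
DOCUMENTS = {
    "refund-policy": "Customers can request a refund within 30 days of purchase.",
    "shipping": "Standard shipping takes 5 to 7 business days.",
    "warranty": "Hardware products include a one year limited warranty.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Step 1: score every document against the query and keep the top k."""
    q = Counter(tokenize(query))
    scored = [
        (doc_id, cosine(q, Counter(tokenize(text))))
        for doc_id, text in DOCUMENTS.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Step 2: place the retrieved passages in front of the question."""
    context = "\n".join(f"- {DOCUMENTS[doc_id]}" for doc_id, _ in retrieve(query, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Step 3 (not shown): send the prompt to the generative model of your choice.
print(build_prompt("How many days do I have to request a refund?"))
```

Swapping `retrieve` for a vector-database query would leave `build_prompt` unchanged. This is one reason RAG pipelines tend to keep retrieval and generation as separate steps.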

Unlike a standalone model, whose knowledge stops at a fixed training cutoff, a RAG-enabled system can stay context-aware and up to date, provided the sources it retrieves from are kept current. The process mirrors how people research: we look up reliable sources before responding, rather than relying on memory alone.

The Limitations of Traditional AI

Without retrieval, a large language model can generate responses only from its training data. This creates several issues. Its knowledge goes out of date after training, which is especially problematic in fast-changing domains such as finance, healthcare, and technology. It also lacks access to proprietary or domain-specific information unique to an organization.

As a result, such systems sometimes produce outdated responses or fill knowledge gaps with plausible but incorrect information, commonly referred to as hallucinations.

How RAG Improves Accuracy

RAG improves accuracy by grounding responses in content retrieved from trusted sources. Because the system pulls relevant information before it generates an answer, responses can be more precise, current, and context-aware.

This is especially valuable in enterprise environments. For example, a customer support assistant can reference live policy documents or updated pricing data instead of relying on outdated training knowledge. Similarly, a healthcare system can draw on the latest research and treatment guidelines, helping keep its recommendations aligned with current standards.

By anchoring outputs to factual sources, RAG reduces guesswork and improves response reliability.

Reducing AI Hallucinations

AI hallucinations occur when a model generates information that sounds correct but is factually wrong. This often happens when the model lacks sufficient knowledge yet attempts to provide a complete answer.

RAG mitigates this issue by having the system retrieve real, relevant data before generating a response. This retrieval step acts as a grounding mechanism, making the model less likely to fabricate details. It cannot eliminate hallucinations entirely, however, if the wrong passages are retrieved or the model ignores them. RAG systems can also cite the sources behind each answer, which increases transparency and lets users verify claims for themselves.
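A retrieval step can support both grounding and attribution if every passage keeps a reference to where it came from. The sketch below works under simplifying assumptions. It numbers each retrieved passage with its source so the model can be asked to cite it. It returns `None` when nothing scores above a threshold, so the application can decline rather than let the model guess. The file names, the Jaccard token-overlap score, and the `MIN_SCORE` value of 0.2 are all hypothetical choices made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Passage:
    source: str  # where the text came from, e.g. a document title or URL
    text: str


# Hypothetical corpus; the source names are placeholders.
CORPUS = [
    Passage("pricing-2024.pdf", "The Pro plan costs 49 dollars per month."),
    Passage("support-faq.md", "Support is available Monday to Friday."),
]

MIN_SCORE = 0.2  # assumed cut-off; below it, retrieval is treated as a miss


def tokens(text: str) -> set[str]:
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Token overlap, from 0 (disjoint) to 1 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def grounded_prompt(question: str, k: int = 2) -> Optional[str]:
    """Build a prompt with numbered, attributable sources, or return None
    when nothing relevant was retrieved so the caller can decline to answer."""
    q = tokens(question)
    scored = sorted(
        ((jaccard(q, tokens(p.text)), p) for p in CORPUS),
        key=lambda pair: pair[0],
        reverse=True,
    )
    hits = [p for score, p in scored[:k] if score >= MIN_SCORE]
    if not hits:
        return None  # no grounding available: better to say "I don't know"
    context = "\n".join(f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(hits, 1))
    return (
        "Answer using only the numbered sources and cite them like [1]. "
        "If the sources do not contain the answer, say so.\n"
        f"{context}\nQuestion: {question}"
    )
```

Take a question such as "How much does the Pro plan cost per month?". It yields a prompt citing `[1] (pricing-2024.pdf)`. An unrelated question returns `None`.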

By connecting answers to verifiable content, RAG transforms AI from a purely generative system into a knowledge-grounded assistant.

Enterprise Knowledge Integration

Modern enterprises generate vast amounts of internal knowledge—documentation, research, customer insights, compliance guidelines, and operational data. However, this knowledge often exists in silos, making it difficult to access efficiently.

RAG solves this by acting as a unified interface across multiple information sources. Instead of manually searching different systems, employees can query a single AI assistant that retrieves and synthesizes relevant information from across the organization.
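One common way to build such a unified interface is to query each source separately and then merge the ranked results. Raw relevance scores from different systems are rarely comparable. The sketch below therefore uses reciprocal rank fusion (RRF), which combines only the rank positions. The source names and document IDs are hypothetical. `k = 60` is the constant commonly used with RRF, not a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: dict[str, list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Merge ranked result lists from several sources into one list.

    Each document earns 1 / (k + rank) from every list it appears in (rank
    starts at 1), so items ranked highly by several sources rise to the top
    without comparing raw scores across different systems.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranked_ids in rankings.values():
        for rank, doc_id in enumerate(ranked_ids, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical per-source results for one employee query.
results = {
    "wiki": ["onboarding-guide", "vpn-setup", "holiday-calendar"],
    "ticketing": ["vpn-setup", "laptop-request"],
    "sharepoint": ["vpn-setup", "onboarding-guide"],
}

for doc_id, score in reciprocal_rank_fusion(results):
    print(f"{doc_id}: {score:.4f}")
```

Here `vpn-setup` comes first because three sources rank it highly, even though the wiki places it only second.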

Importantly, a well-designed enterprise RAG implementation enforces existing security permissions at retrieval time. Users receive only information they are already authorized to access, which keeps sensitive data protected.
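A permission check like this is typically applied as a filter on the retrieval step, so restricted content is excluded before anything reaches the model. The following sketch assumes each indexed document carries the groups allowed to read it, copied from the originating system. The document IDs and group names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    # Groups allowed to read this document (assumed to mirror the source ACL).
    allowed_groups: frozenset[str] = field(default_factory=frozenset)


# Hypothetical index entries with access-control lists.
INDEX = [
    Document("hr-salaries", "Salary bands for 2024", frozenset({"hr"})),
    Document("it-vpn", "How to configure the VPN client", frozenset({"all-staff"})),
    Document("board-minutes", "Minutes of the board meeting", frozenset({"executives"})),
]


def visible_documents(user_groups: set[str]) -> list[Document]:
    """Keep only documents the user could already open in the source system.

    Filtering happens before ranking, so restricted text never ends up in
    the prompt sent to the model.
    """
    return [d for d in INDEX if d.allowed_groups & user_groups]


print([d.doc_id for d in visible_documents({"all-staff"})])        # ['it-vpn']
print([d.doc_id for d in visible_documents({"all-staff", "hr"})])  # ['hr-salaries', 'it-vpn']
```

In practice the user's group memberships would likely come from the organization's identity provider rather than being passed in by hand.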

When implemented thoughtfully—with well-organized data and clear governance—RAG significantly enhances productivity and knowledge sharing across teams.

Real-World Applications

RAG is transforming several industries:

In customer support, it gives chatbots and support agents access to up-to-date policies and technical documentation, improving resolution speed and accuracy.

In healthcare, it supports clinicians by retrieving the latest medical research and clinical guidelines, strengthening evidence-based decision-making.

In legal and compliance functions, it helps professionals navigate complex regulations and case law by synthesizing relevant documents quickly.

Across industries, the common benefit is improved decision quality through better access to trustworthy information.

The Future of RAG

RAG technology continues to evolve. Emerging advancements include multimodal retrieval (working with text, images, and other formats) and real-time integration with live data streams. As retrieval mechanisms become more sophisticated, systems will not only find relevant documents but also better understand relationships between different sources.

For organizations, adopting RAG is both a technical and strategic move. It requires high-quality data, clear objectives, and ongoing refinement. However, the payoff is substantial: more reliable AI systems that truly augment human expertise.

Conclusion

Retrieval-Augmented Generation represents a major step forward in enterprise AI. By combining generative intelligence with real-time knowledge retrieval, RAG addresses key challenges such as outdated information, hallucinations, and knowledge silos.

It transforms AI from a static responder into a dynamic, trustworthy assistant—one that is grounded in facts, aligned with current information, and securely integrated with enterprise systems. As organizations increasingly rely on AI for decision-making and operations, RAG is poised to become a foundational component of modern intelligent systems.

Explore our AI/ML services below

- Connect with us – https://internetsoft.com/

- Call or WhatsApp us – +1 305-735-9875

ABOUT THE AUTHOR

Abhishek Bhosale

COO, Internet Soft

Abhishek is a dynamic Chief Operations Officer with a proven track record of optimizing business processes and driving operational excellence. With a passion for strategic planning and a keen eye for efficiency, Abhishek has successfully led teams to deliver exceptional results in AI, ML, core banking, and blockchain projects. His expertise lies in streamlining operations and fostering innovation for sustainable growth.